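I. Overview

Cluster API is another open-source project under Kubernetes. It provides CRDs for different cloud and virtualization platforms, so the resources of each platform can be defined, used, and managed the same way Kubernetes objects are. These notes record my own experience with it, using AWS as the platform.

II. Preparation

Some preparation is needed before using Cluster API; the detailed installation steps are skipped here.

1. Prepare a Kubernetes cluster to act as the management cluster.

The detailed steps for creating and configuring this cluster are skipped here.

2. Install the required tools and configure them:

1) kubectl

2) docker

3) clusterctl (download the latest release; v1.1.3 at the time of writing)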

curl -L https://github.com/kubernetes-sigs/cluster-api/releases/download/v1.1.3/clusterctl-linux-amd64 -o clusterctl

4)clusterawsadm

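Download: https://github.com/kubernetes-sigs/cluster-api-provider-aws/releases

This tool mainly takes care of the IAM permissions needed to create AWS resources. Before using clusterawsadm you need administrator permissions and the following environment variables:

AWS_REGION

AWS_ACCESS_KEY_ID

AWS_SECRET_ACCESS_KEY

AWS_SESSION_TOKEN (required if you use multi-factor authentication)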
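Before running clusterawsadm it can help to confirm that the variables listed above are actually set. The following is a minimal sketch, not part of any AWS or Cluster API tooling: the helper name missing_env is made up for illustration, and the region us-east-1 in the commented example is only an assumption.

```shell
# Print the name of every variable passed as an argument that is unset or empty.
# Returns non-zero when at least one is missing. (Hypothetical helper.)
missing_env() {
  missing=0
  for name in "$@"; do
    eval "value=\${$name:-}"   # indirect lookup that also works in plain sh
    if [ -z "$value" ]; then
      echo "$name"
      missing=1
    fi
  done
  return "$missing"
}

# Example values (placeholders, replace with your own):
# export AWS_REGION=us-east-1
# export AWS_ACCESS_KEY_ID=<access key id>
# export AWS_SECRET_ACCESS_KEY=<secret access key>
# export AWS_SESSION_TOKEN=<session token>   # only with multi-factor authentication

missing_env AWS_REGION AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY \
  || echo "Set the variables listed above before running clusterawsadm."
```

AWS_SESSION_TOKEN is left out of the check on purpose, since it is only needed with multi-factor authentication.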

a. Then run the following command to create the required IAM resources:

clusterawsadm bootstrap iam create-cloudformation-stack

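Tip: additional permission settings are described in the cluster-api-provider-aws documentation.

b. Encode the AWS credentials from the environment variables above as a base64 value; clusterctl init later stores it in a Kubernetes secret: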

export AWS_B64ENCODED_CREDENTIALS=$(clusterawsadm bootstrap credentials encode-as-profile)
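A quick sanity check on the exported value can catch an empty or truncated variable before it reaches clusterctl init. This is only a sketch: the function name check_b64_credentials is hypothetical, and it assumes a base64 command that accepts -d (as GNU coreutils does). It only verifies that the value decodes; it does not validate the credentials themselves.

```shell
# Succeeds only when AWS_B64ENCODED_CREDENTIALS is non-empty and decodes as base64.
# The decoded text is discarded so the secret never reaches the terminal.
check_b64_credentials() {
  [ -n "${AWS_B64ENCODED_CREDENTIALS:-}" ] || return 1
  printf '%s' "$AWS_B64ENCODED_CREDENTIALS" | base64 -d >/dev/null 2>&1
}

# Usage:
# check_b64_credentials || echo "AWS_B64ENCODED_CREDENTIALS is empty or not valid base64"
```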

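5) Set up the default configuration file

The default configuration file is $HOME/.cluster-api/clusterctl.yaml. It can set the many variables a provider accepts, which can be listed as follows: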

[root@ip-172-31-13-197 src]# clusterctl generate provider --infrastructure aws --describe

Name: aws

Type: InfrastructureProvider

URL: https://github.com/kubernetes-sigs/cluster-api-provider-aws/releases/

Version: v1.4.0

File: infrastructure-components.yaml

TargetNamespace: capa-system

Variables:

- AUTO_CONTROLLER_IDENTITY_CREATOR

- AWS_B64ENCODED_CREDENTIALS

- AWS_CONTROLLER_IAM_ROLE

- CAPA_EKS

- CAPA_EKS_ADD_ROLES

- CAPA_EKS_IAM

- CAPA_LOGLEVEL

- EVENT_BRIDGE_INSTANCE_STATE

- EXP_BOOTSTRAP_FORMAT_IGNITION

- EXP_EKS_FARGATE

- EXP_MACHINE_POOL

- K8S_CP_LABEL

Images:

- k8s.gcr.io/cluster-api-aws/cluster-api-aws-controller:v1.4.0

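To override this configuration you can also set up an overrides layer.

Note:

If an environment variable with the same name is also set, the environment variable takes precedence.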
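As an illustration of the configuration file described above, the sketch below writes a small clusterctl.yaml in which provider variables appear as plain top-level keys. The function name write_clusterctl_config and the chosen values are assumptions for the example: EXP_MACHINE_POOL and CAPA_LOGLEVEL are taken from the variable list above, while "true" and "4" are merely sample settings.

```shell
# Write a minimal clusterctl.yaml into the directory given as $1.
# (Hypothetical helper; the default location named above is "$HOME/.cluster-api".)
write_clusterctl_config() {
  config_dir=$1
  mkdir -p "$config_dir"
  cat > "$config_dir/clusterctl.yaml" <<EOF
# Provider variables are plain top-level keys.
AWS_B64ENCODED_CREDENTIALS: "${AWS_B64ENCODED_CREDENTIALS:-}"
EXP_MACHINE_POOL: "true"
CAPA_LOGLEVEL: "4"
EOF
  # The file contains credentials, so keep it private to the current user.
  chmod 600 "$config_dir/clusterctl.yaml"
}

# Usage (overwrites an existing file):
# write_clusterctl_config "$HOME/.cluster-api"
```

Because environment variables win over the file, a value exported in the shell still overrides whatever is written here.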

III. Initialize the management cluster

By default, clusterctl init installs the latest available version of each provider (AWS is used as the example here).

[root@ip-172-31-13-197 customer]# clusterctl init --infrastructure aws --target-namespace capa

Fetching providers

Installing cert-manager Version="v1.5.3"

Waiting for cert-manager to be available...

Installing Provider="cluster-api" Version="v1.1.3" TargetNamespace="capa"

Installing Provider="bootstrap-kubeadm" Version="v1.1.3" TargetNamespace="capa"

Installing Provider="control-plane-kubeadm" Version="v1.1.3" TargetNamespace="capa"

I0417 09:20:04.840223 15472 request.go:665] Waited for 1.026955854s due to client-side throttling, not priority and fairness, request: GET:https://8AD3E49178C37D17AAE79D9114DD0D5F.gr7.us-east-1.eks.amazonaws.com/apis/controlplane.cluster.x-k8s.io/v1beta1?timeout=30s

Installing Provider="infrastructure-aws" Version="v1.4.0" TargetNamespace="capa"

Your management cluster has been initialized successfully!

You can now create your first workload cluster by running the following:

clusterctl generate cluster [name] --kubernetes-version [version] | kubectl apply -f -

If you see the following error:

[root@ip-172-31-13-197 customer]# clusterctl init --infrastructure aws --target-namespace capa

Fetching providers

Installing cert-manager Version="v1.5.3"

Error: failed to read "cert-manager.yaml" from provider's repository "cert-manager": failed to get GitHub release v1.5.3: rate limit for github api has been reached. Please wait one hour or get a personal API token and assign it to the GITHUB_TOKEN environment variable

Create a personal access token in your GitHub account settings, then set it in the GITHUB_TOKEN environment variable to resolve this error:

# export GITHUB_TOKEN=<your-personal-access-token>

IV. Create a workload cluster

1. Generate the workload cluster manifest with clusterctl

clusterctl generate cluster capa01 \
  --kubernetes-version v1.21.1 \
  --control-plane-machine-count=1 \
  --worker-machine-count=1 \
  --flavor machinepool \
  --target-namespace mycluster \
  > capa01.yaml
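Before applying the generated manifest, it may be worth a glance at which resource kinds it declares. The helper below is a small sketch, not a clusterctl feature: manifest_kinds is a made-up name, and it simply collects the top-level kind: lines from a multi-document YAML file.

```shell
# Print the distinct resource kinds declared in a multi-document manifest.
# (Hypothetical helper; relies only on grep, awk, and sort.)
manifest_kinds() {
  grep '^kind:' "$1" | awk '{print $2}' | sort -u
}

# Usage:
# manifest_kinds capa01.yaml
```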

2. Create the namespace mycluster

kubectl create ns mycluster

3. Create the workload cluster

kubectl apply -f capa01.yaml

4. Check the control plane status

At this point the control plane is not ready yet, as shown below:

# kubectl get kubeadmcontrolplane -A

NAMESPACE NAME CLUSTER INITIALIZED API SERVER AVAILABLE REPLICAS READY UPDATED UNAVAILABLE AGE VERSION

mycluster capa01-control-plane capa01 true 1 1 0 28m v1.21.1

5. Save the kubeconfig of the workload cluster capa01 to the file capa01.kubeconfig

# clusterctl get kubeconfig capa01 -n mycluster > capa01.kubeconfig

6. Use the kubeconfig file to install a network plugin in the workload cluster

Calico is used as the network plugin here.

# kubectl --kubeconfig=./capa01.kubeconfig apply -f https://docs.projectcalico.org/v3.21/manifests/calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

poddisruptionbudget.policy/calico-kube-controllers created

7. Check the control plane status again

As shown below, it now reports ready:

# kubectl get kubeadmcontrolplane -A

NAMESPACE NAME CLUSTER INITIALIZED API SERVER AVAILABLE REPLICAS READY UPDATED UNAVAILABLE AGE VERSION

mycluster capa01-control-plane capa01 true true 1 1 1 0 28m v1.21.1
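Instead of re-running the command by hand until the status changes, the check can be polled. The sketch below assumes the resource name and namespace from this walkthrough, and that the API SERVER AVAILABLE column is backed by the .status.ready field of the KubeadmControlPlane; the helper names retry_until and control_plane_ready are made up for illustration.

```shell
# Run a command repeatedly until it succeeds or the attempts run out.
# $1 = maximum attempts, $2 = seconds between attempts, remaining args = command.
retry_until() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while :; do
    if "$@"; then
      return 0
    fi
    if [ "$i" -ge "$attempts" ]; then
      return 1
    fi
    i=$((i + 1))
    sleep "$delay"
  done
}

# True when the control plane from this walkthrough reports status.ready=true.
# (Assumption: .status.ready is the field shown as API SERVER AVAILABLE.)
control_plane_ready() {
  [ "$(kubectl get kubeadmcontrolplane capa01-control-plane -n mycluster \
        -o jsonpath='{.status.ready}' 2>/dev/null)" = "true" ]
}

# Usage (waits roughly 10 minutes at most):
# retry_until 60 10 control_plane_ready && echo "control plane is ready"
```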

You can now inspect the cluster, for example its nodes:

# kubectl --kubeconfig=./capa01.kubeconfig get nodes

NAME STATUS ROLES AGE VERSION

ip-10-0-213-197.ec2.internal Ready control-plane,master 20m v1.21.1

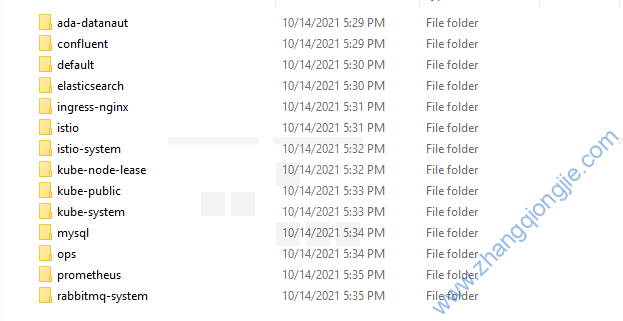

List the pods:

# kubectl --kubeconfig=./capa01.kubeconfig get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-fd5d6b66f-w7wck 1/1 Running 0 2m29s

kube-system calico-node-gx246 1/1 Running 0 2m29s

kube-system coredns-558bd4d5db-66jz8 1/1 Running 0 21m

kube-system coredns-558bd4d5db-vxs82 1/1 Running 0 21m

kube-system etcd-ip-10-0-213-197.ec2.internal 1/1 Running 0 20m

kube-system kube-apiserver-ip-10-0-213-197.ec2.internal 1/1 Running 0 20m

kube-system kube-controller-manager-ip-10-0-213-197.ec2.internal 1/1 Running 0 20m

kube-system kube-proxy-4fd6b 1/1 Running 0 21m

kube-system kube-scheduler-ip-10-0-213-197.ec2.internal 1/1 Running 0 20m

V. Clean up resources

1. Delete the workload cluster

kubectl delete cluster capa01 -n mycluster

2. Remove the Cluster API providers from the management cluster

clusterctl delete --all

琼杰笔记
